ollama launch; Sightline; Kitty Cards

As promised on Mastodon, we’ve got a review/take on “ollama launch”, followed by a bonkers cool physical OSINT tool using OSM data, and a short intro to a resource that lets us make our own Apple Wallet cards.

TL;DR

(This is an LLM/GPT-generated summary of today’s Drop. Ollama + MiniMax M2.1.)

- Ollama’s new “launch” feature allows Claude Code to work with local models by making Ollama’s server compatible with Anthropic’s API, though local models still lag behind frontier systems and the pricing structure suggests a funnel toward Ollama’s cloud service (https://ollama.com/blog/launch).

- Sightline bridges the gap between OpenStreetMap data and findable infrastructure assets with a dual query system supporting natural language and structured syntax for searching 100+ categories like telecom towers, power plants, and airports (https://sightline-maps.vercel.app/).

- Kitty Cards offers a simple, well-implemented way to create custom Apple Wallet cards (https://kitty.cards/).

ollama launch

I feel compelled to implore you to visit “Dear Vibecoder — Talks With Opus” before or after this section, especially since this section is about LLM-assisted coding.

Ollama dropped a new feature this week called “ollama launch” that lets us point coding agents like Claude Code at local models, or at any API-compatible model infrastructure we run ourselves, instead of the original provider’s API. On the surface it looks like a neat quality-of-life improvement for the local-first crowd among us: one command, no fiddling with environment variables, and suddenly Claude Code is talking to whatever model we want. If we peek behind the curtain, though, the Wizard’s true form shows, and the picture gets a wee bit more interesting.

What Ollama actually did was modify their server to speak the same API dialect as Anthropic, so when Claude Code makes a request thinking it’s talking to Anthropic’s servers, Ollama answers it and routes the call to a local model or to one of its hosted cloud models instead. The “launch” command is essentially a convenience wrapper that sets the right environment variables for you. You can see this in their documentation, where they spell out the manual setup: export ANTHROPIC_AUTH_TOKEN=ollama, blank out the API key, and point the base URL at localhost. There’s nothing magical (or cool) happening under the hood.
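To make the “nothing magical” point concrete, here is a minimal Python sketch of what such a wrapper boils down to. It assumes Ollama’s default local address (http://localhost:11434) and the three variables from the manual setup; the helper names and the model name are illustrative, and this is not how `ollama launch` is actually implemented. It only builds the environment and an Anthropic Messages-style request (the shape Claude Code sends), without sending anything.

```python
import json
import os
import urllib.request

# Ollama's default local server address (assumption: a stock install).
OLLAMA_BASE_URL = "http://localhost:11434"


def build_claude_env(base_url: str = OLLAMA_BASE_URL) -> dict[str, str]:
    """Copy the current environment and override the three Anthropic
    variables from Ollama's manual-setup docs, so Claude Code sends its
    requests to base_url instead of Anthropic."""
    env = dict(os.environ)
    env["ANTHROPIC_AUTH_TOKEN"] = "ollama"  # placeholder value from the docs
    env["ANTHROPIC_API_KEY"] = ""           # blanked out, per the docs
    env["ANTHROPIC_BASE_URL"] = base_url    # the actual redirect
    return env


def build_messages_request(model: str, prompt: str,
                           base_url: str = OLLAMA_BASE_URL) -> urllib.request.Request:
    """Build (but do not send) an Anthropic Messages API-style request.
    A server that 'speaks Anthropic' has to accept this shape at /v1/messages."""
    body = {
        "model": model,
        "max_tokens": 1024,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/messages",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "content-type": "application/json",
            "anthropic-version": "2023-06-01",
            # ANTHROPIC_AUTH_TOKEN ends up as a bearer token.
            "authorization": "Bearer ollama",
        },
        method="POST",
    )


if __name__ == "__main__":
    env = build_claude_env()
    print({k: v for k, v in env.items() if k.startswith("ANTHROPIC_")})
    req = build_messages_request("qwen3-coder", "Explain this repo's layout.")
    print(req.get_method(), req.full_url)
    # To actually start Claude Code against the local server (needs the
    # `claude` CLI on PATH): subprocess.run(["claude"], env=env)
```

Swap `base_url` for any other Anthropic-compatible endpoint and nothing else changes, which is the whole trick.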

The marketing pitch leans hard on the usual local-first talking points: Keep your data private! Run models on your own hardware! Democratize AI! These are legit concerns for plenty of developers, especially those working with sensitive codebases or in regulated industries. But there’s a catch that Ollama’s announcement glosses over: local models still lag behind the frontier systems from Anthropic, OpenAI, Google, etc. by a meaningful margin. The recommended local models for coding work, such as glm-4.7-flash and qwen3-coder, need around 23 GB of VRAM and a 64,000-token context window to function properly. Even with that hardware investment, you’re not getting Claude-level reasoning. The local model experience is improving, and there’s genuine momentum toward capable models that run efficiently on consumer hardware and even on CPUs, but we’re not there yet. Oh, and let’s not forget that our ability to build bigger systems for bigger models has taken a pretty hard hit now that the AI giants have driven up component prices.
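Why do VRAM and context length travel together? A rough back-of-envelope estimate is quantized weights plus the attention KV cache, and the KV cache grows linearly with the number of tokens in context. The sketch below uses made-up architecture numbers (48 layers, 4 KV heads, head dimension 128, fp16 cache, a 30B-parameter model at 4 bits per weight) purely for illustration; they are not the published configs of glm-4.7-flash or qwen3-coder, and real runtimes add activation and buffer overhead on top.

```python
def kv_cache_bytes(layers: int, kv_heads: int, head_dim: int,
                   context_tokens: int, bytes_per_elem: int = 2) -> int:
    """Keys + values (the leading factor of 2) stored for every layer,
    every KV head, and every token in the context window."""
    return 2 * layers * kv_heads * head_dim * context_tokens * bytes_per_elem


def weight_bytes(params: float, bits_per_weight: float) -> float:
    """Quantized weight storage, ignoring per-block scale/zero-point overhead."""
    return params * bits_per_weight / 8


GIB = 1024 ** 3

# Hypothetical model shape, for illustration only.
kv = kv_cache_bytes(layers=48, kv_heads=4, head_dim=128, context_tokens=64_000)
weights = weight_bytes(params=30e9, bits_per_weight=4)

print(f"KV cache @ 64k tokens:   {kv / GIB:.2f} GiB")
print(f"4-bit weights:           {weights / GIB:.2f} GiB")
print(f"Total (before overhead): {(kv + weights) / GIB:.2f} GiB")
```

Under these assumptions the KV term alone is several GiB at 64k tokens, and doubling the context doubles it, which is why a usable context window shows up right next to the VRAM requirement.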

This is where Ollama Cloud enters the picture, and probably where the real business strategy lives. Alongside the launch feature, Ollama rolled out a tiered pricing structure with a $100-per-month Max plan that promises 20x more cloud model usage than the $20 Pro tier. The pitch: extended coding sessions of up to 5 hours and access to larger hosted models. If local models were already competitive with Claude, why would anyone pay $100 a month for Ollama’s cloud? They wouldn’t. The local-first messaging is aspirational, but the revenue model depends on users discovering that local isn’t quite good enough and then upgrading to cloud. So, let’s call it what it is: a funnel disguised as a philosophy.
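Taking the “20x” claim at face value, and calling one Pro plan’s worth of cloud usage $u$, the per-unit arithmetic shows where the nudge is:

```latex
\begin{aligned}
\text{Pro:}\quad & \frac{\$20}{1\,u} = \$20 \text{ per } u \\
\text{Max:}\quad & \frac{\$100}{20\,u} = \$5 \text{ per } u
\end{aligned}
```

Max costs five times as much as Pro on the sticker but a quarter as much per unit of usage, a discount that pays off precisely for heavy users of the cloud models.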

That said, the feature does open up interesting possibilities for experimentation. You can now use Claude Code’s interface and tooling, which is genuinely well-designed, with whatever backend model you want. (I tried it on some tasks and it works great.) That has value for testing, for development workflows where absolute quality matters less than iteration speed, and for the eventual future where local models catch up. The tooling landscape benefits when the interface layer separates from the model layer.

It’s worth noting that Anthropic, OpenAI, and the other major providers aren’t profitable right now. They’re burning through capital to build market share and hoping the economics work out eventually. If Ollama’s approach starts pulling meaningful revenue away from these companies, expect them to respond. API compatibility isn’t a full operational contract, and a provider could introduce authentication changes, modify their API spec in ways that break third-party compatibility layers, or implement technical measures that make this kind of redirection harder to maintain. The current situation where Ollama can seamlessly impersonate Anthropic’s API exists because Anthropic either hasn’t noticed, doesn’t care yet (I mean, it’s only been ~2 days, so give them time?), or sees some strategic benefit in letting it continue. Any of those conditions could change.

So where does that leave things? Ollama launch is a proper feature that works and makes certain workflows easier. The democratization angle is half-true at best given the current state of local models. The pricing structure suggests Ollama is betting you’ll try local, find it wanting, and pay them instead of Anthropic for cloud inference. And the whole arrangement sits on a foundation that the major providers could undermine whenever they decide to. So, use it if it fits your workflow (I am/will be), but go in with your eyes open about what game is actually being played.

Sightline

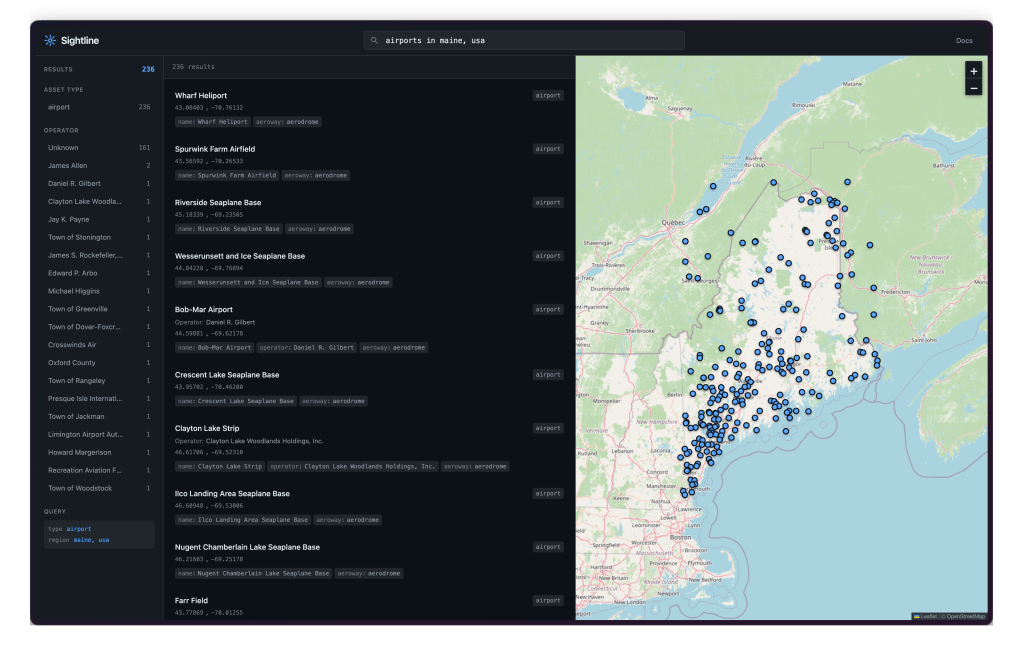

There’s a gap between “data exists in OpenStreetMap” and “I can actually find what I’m looking for,” and Nisarga Adhikary’s Sightline (GH) bridges it with a clean query interface for infrastructure assets (e.g., telecom towers, substations, refineries, data centers, ports, the works).

It worked for me on the first try, thanks to the snazzy assisted-prompt feature of the input box. There’s actually a dual query system: you can go natural language (“power plants near mumbai”) and let the natural language processing (NLP) parser figure out what you mean, or drop into structured syntax when you need precision (e.g., “type:nuclear region:bavaria” or “type:data_center operator:amazon country:ireland”). The structured approach supports filtering by operator, country, region, and proximity radius, which gets you past the “show me everything vaguely matching this keyword” problem that plagues most map searches.
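The routing decision between the two modes is easy to sketch. Below is a minimal Python illustration, not a port of Sightline’s parser.ts: the set of filter keys and the “near”/“in” split are assumptions, and a real natural-language path would do far more than split a string.

```python
import re
from dataclasses import dataclass, field

# Filter keys mentioned in the post plus "radius"; the exact set is an assumption.
KNOWN_KEYS = {"type", "operator", "country", "region", "radius"}
TOKEN = re.compile(r"(\w+):(\S+)")  # key:value, e.g. type:nuclear


@dataclass
class Query:
    mode: str                                        # "structured" or "natural"
    filters: dict[str, str] = field(default_factory=dict)


def parse_query(raw: str) -> Query:
    """If every whitespace-separated token is a known key:value pair, treat the
    input as structured syntax; otherwise fall back to a naive natural-language
    split on ' near ' / ' in ' to separate *what* from *where*."""
    tokens = raw.strip().split()
    matches = [TOKEN.fullmatch(t) for t in tokens]
    if tokens and all(m and m.group(1).lower() in KNOWN_KEYS for m in matches):
        return Query("structured",
                     {m.group(1).lower(): m.group(2).lower() for m in matches})

    lowered = raw.strip().lower()
    for sep in (" near ", " in "):
        if sep in lowered:
            what, where = lowered.split(sep, 1)
            return Query("natural", {"what": what, "where": where})
    return Query("natural", {"what": lowered})


if __name__ == "__main__":
    for q in ("type:data_center operator:amazon country:ireland",
              "power plants near mumbai",
              "airports in maine"):
        print(q, "->", parse_query(q))
```

Requiring *every* token to be a known pair keeps a stray colon in free text (say, a timestamp) from accidentally flipping a query into structured mode.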

The “airports in maine” query shown in the section header image returned instantly, and every dot below mid-Maine is accurate as far as I can tell (I’ve been around a large portion of that part of the state).

Under the hood, it’s Nominatim for geocoding and the Overpass API for pulling OSM data. The architecture is clean and clear, with parser.ts handling query interpretation, geo.ts managing the Nominatim calls, and overpass.ts building the actual Overpass queries. It even has an in-memory cache to avoid hammering the public APIs. Since both are open public services, there’s no need to bring API keys along for the ride and no environment variables to worry about. Oh, did I forget to mention that it’s self-hostable?
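For a feel of the geocode → query → cache pipeline, here is a hedged Python sketch. It is not a translation of geo.ts or overpass.ts; it assumes the public Nominatim and overpass-api.de endpoints, uses Overpass’s convention that an area ID is a relation ID plus 3600000000, and builds strings only, so nothing touches the network. The relation ID in the demo is a placeholder.

```python
import time
import urllib.parse
from typing import Any, Callable, Optional, Tuple

NOMINATIM_SEARCH = "https://nominatim.openstreetmap.org/search"
OVERPASS_INTERPRETER = "https://overpass-api.de/api/interpreter"  # POST data=<query>

# Overpass derives an area ID from an OSM relation ID by adding this offset.
RELATION_AREA_OFFSET = 3_600_000_000


def nominatim_url(place: str) -> str:
    """Geocoding URL for the single best match, as JSON.
    (Nominatim's usage policy asks for an identifying User-Agent header.)"""
    params = {"q": place, "format": "jsonv2", "limit": 1}
    return f"{NOMINATIM_SEARCH}?{urllib.parse.urlencode(params)}"


def overpass_query(tag_filter: str, *, relation_id: Optional[int] = None,
                   around: Optional[Tuple[int, float, float]] = None,
                   timeout: int = 25) -> str:
    """Overpass QL for features matching tag_filter, e.g. '["aeroway"="aerodrome"]',
    either inside an admin area (from a Nominatim relation ID) or within
    radius_m meters of (lat, lon)."""
    header = f"[out:json][timeout:{timeout}];"
    if relation_id is not None:
        area_id = RELATION_AREA_OFFSET + relation_id
        return f"{header}area({area_id})->.a;nwr{tag_filter}(area.a);out center;"
    if around is not None:
        radius_m, lat, lon = around
        return f"{header}nwr{tag_filter}(around:{int(radius_m)},{lat},{lon});out center;"
    raise ValueError("need either relation_id or around")


class TTLCache:
    """Tiny in-memory cache so repeated searches don't hammer public APIs."""

    def __init__(self, ttl_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: dict = {}  # key -> (stored_at, value)

    def get_or_fetch(self, key: str, fetch: Callable[[], Any]) -> Any:
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]           # fresh hit: no network call
        value = fetch()             # miss or expired: call the API
        self._store[key] = (now, value)
        return value


if __name__ == "__main__":
    print(nominatim_url("maine"))
    # 12345 is a placeholder relation ID, not Maine's real one.
    print(overpass_query('["aeroway"="aerodrome"]', relation_id=12345))
```

The Overpass query string itself makes a natural cache key, e.g. `cache.get_or_fetch(q, lambda: post_to_overpass(q))`, where `post_to_overpass` is a hypothetical helper that POSTs to the interpreter endpoint.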

Supported asset types cover 100+ categories spanning energy (solar, wind, nuclear, coal, hydro, geothermal), telecommunications, oil and gas infrastructure, water utilities, aviation, maritime, rail, industrial facilities, military installations, and government buildings. You can query for hospitals or prisons or embassies or EV charging stations (or airports) with equal ease.
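On the OSM side, a long category list mostly boils down to a lookup from friendly names to tag combinations. Here is a hypothetical slice of such a table, using common OSM tagging conventions (not Sightline’s actual table), plus the chained Overpass tag filter each entry produces.

```python
# Hypothetical category table; tags follow common OSM conventions.
CATEGORIES: dict[str, list[tuple[str, str]]] = {
    "airport":       [("aeroway", "aerodrome")],
    "nuclear":       [("power", "plant"), ("plant:source", "nuclear")],
    "solar":         [("power", "plant"), ("plant:source", "solar")],
    "substation":    [("power", "substation")],
    "data_center":   [("telecom", "data_center")],
    "telecom_tower": [("man_made", "tower"), ("tower:type", "communication")],
    "hospital":      [("amenity", "hospital")],
    "prison":        [("amenity", "prison")],
    "embassy":       [("office", "diplomatic"), ("diplomatic", "embassy")],
    "ev_charging":   [("amenity", "charging_station")],
}


def tag_filter(category: str) -> str:
    """Turn a category into chained Overpass tag filters (all must match)."""
    try:
        tags = CATEGORIES[category]
    except KeyError:
        raise ValueError(f"unknown category: {category!r}") from None
    return "".join(f'["{k}"="{v}"]' for k, v in tags)


if __name__ == "__main__":
    for name in ("airport", "nuclear", "embassy"):
        print(f"{name:>8}: {tag_filter(name)}")
```

Chained filters are an AND in Overpass QL, which is why “nuclear” needs both the generic `power=plant` tag and the `plant:source` refinement.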

I am required by law to note that OSM data varies wildly in completeness depending on where you’re looking (European coverage tends to be better than that of, say, rural Africa), but as a reconnaissance tool or starting point for infrastructure research, this does the job. Now to see if it knows about any Flock camera installations.

Kitty Cards

We’ll keep this short given that the first two sections are tome-ish.

Álvaro Ramírez has a short post up about Kitty Cards, a super-simple way to create our own Apple Wallet cards.

No more blather from me, save for noting that it’s a great idea and implemented well.

FIN

Remember, you can follow and interact with the full text of The Daily Drop’s free posts on:

- 🐘 Mastodon via @dailydrop.hrbrmstr.dev@dailydrop.hrbrmstr.dev
- 🦋 Bluesky via https://bsky.app/profile/dailydrop.hrbrmstr.dev.web.brid.gy

☮️
