Postman’s MCP Factory; The M in “MCP” Stands for Malicious; Natural Language Web (NLWeb)

I built up a few issues completely sans “AI”, knowing I’d be doing this one. I’d suggest even the AI-contrarians at least read the last section, because I think it’s going to sneak up on us all if we aren’t paying attention to what Microsoft is trying to do to the web, and that has some fairly stark implications.

TL;DR

(This is an LLM/GPT-generated summary of today’s Drop using Ollama + Qwen 3 and a custom prompt.)

- Postman’s MCP Factory provides a straightforward way to generate MCP servers, but they lack the nuanced intelligence needed for powerful LLM agent integrations (https://www.postman.com/explore/mcp-generator)

- The M in “MCP” Stands for Malicious highlights security concerns with MCP servers, revealing that nearly 8% of analyzed servers showed signs of malicious intent or critical vulnerabilities (https://blog.virustotal.com/2025/06/what-17845-github-repos-taught-us-about.html)

- Natural Language Web (NLWeb) introduces a protocol that allows AI agents to query websites in natural language, but it raises concerns about the web’s future as a machine-first architecture (https://github.com/microsoft/NLWeb)

Postman’s MCP Factory

Generated MCP servers work, but they’re not intelligent.

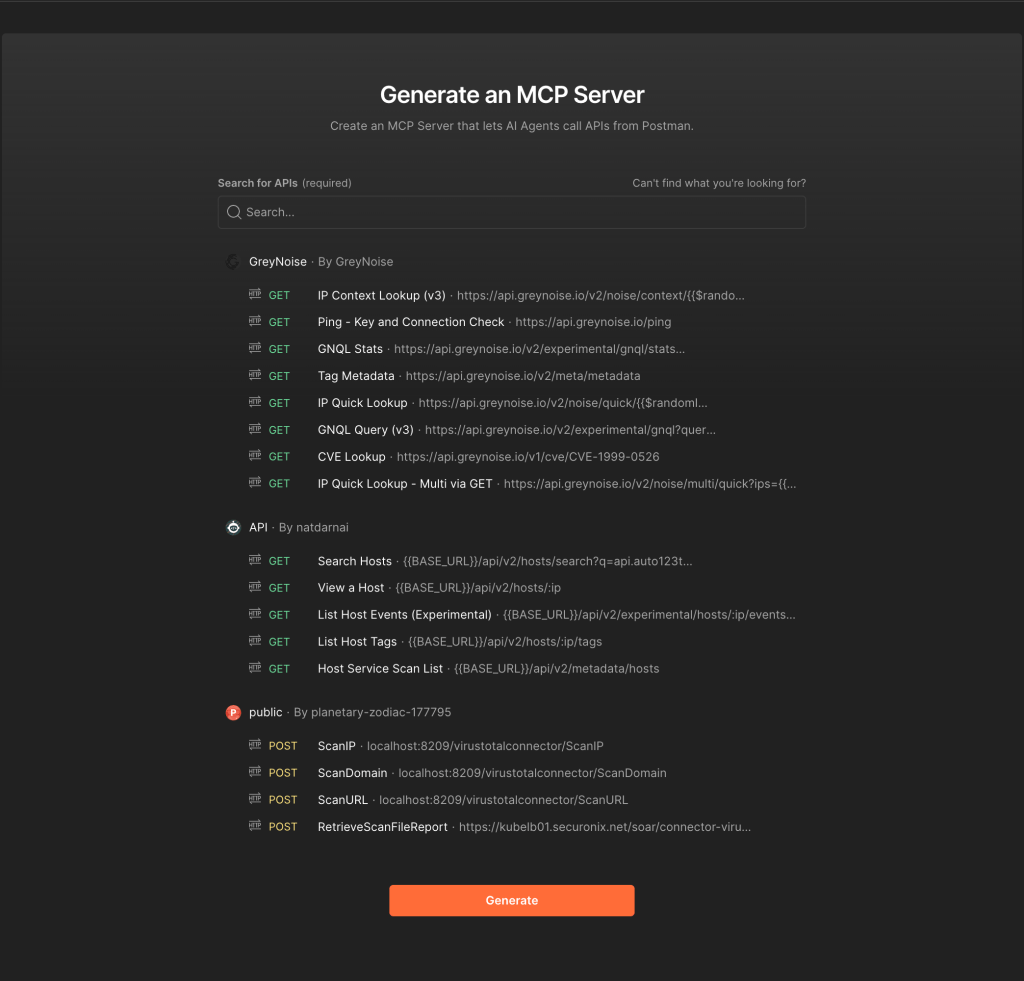

Postman’s new LLM/GPT Tool Builder makes creating Model Context Protocol (MCP) servers surprisingly straightforward. Their approach is elegantly simple: select your API collection, hit generate, and receive a clean TypeScript MCP server ready to bridge your services with LLM systems. The generated code follows MCP standards precisely, includes proper documentation, and runs locally with minimal setup, including a Docker container.

Giovanni Cocco actually beat Postman to this concept by a few months with giovannicocco/mcp-server-postman-tool-generation, but Postman’s implementation is now the more polished solution.

But here’s the thing about generated code: it’s a starting point, not a destination.

The default MCP server generation creates basic functionality. You get API endpoint exposure and standard request handling, but you’re missing the nuanced intelligence that makes LLM agent integrations truly powerful.

Generated servers typically have three critical gaps. First, they expose raw endpoints without contextual helpers. Your agent can call an endpoint, but it can’t easily compose operations, validate complex workflows, or understand relationships between different API calls. Second, their responses aren’t optimized for AI consumption. JSON fields that make sense to developers don’t necessarily translate well to language model reasoning. You need structured, semantic output that provides context alongside data. Third, they include sparse tool descriptions that tell AI systems what a tool does, but not when, why, or how to use it effectively.

Effective MCP servers require strategic enhancement across each of these areas.

Create composite functions that encode business logic. Instead of simply exposing GET /users/{id}, build functions like getUserWithContext() that aggregate user data, recent activity, and relevant permissions in a single call. For inventory APIs, wrap basic endpoints with operations like checkStockAndReorder() or analyzeInventoryTrends(). AI agents excel when they can accomplish complex tasks through fewer, more intelligent operations.
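To make the composite idea concrete, here is a minimal sketch of what getUserWithContext() might look like, written in Python for brevity rather than the TypeScript Postman generates. The three in-memory dictionaries are hypothetical stand-ins for separate API endpoints, and the field names are assumptions for illustration only.

```python
from dataclasses import dataclass, field

# Hypothetical in-memory stand-ins for three separate API endpoints.
# A real server would call the underlying HTTP API instead.
USERS = {"u1": {"id": "u1", "name": "Ada", "status": "active"}}
ACTIVITY = {"u1": ["login 2024-06-01", "updated profile 2024-05-28"]}
PERMISSIONS = {"u1": ["read:reports", "write:profile"]}


@dataclass
class UserContext:
    user: dict
    recent_activity: list = field(default_factory=list)
    permissions: list = field(default_factory=list)


def get_user_with_context(user_id: str, activity_limit: int = 5) -> UserContext:
    """Aggregate profile, recent activity, and permissions in one call."""
    user = USERS.get(user_id)
    if user is None:
        raise KeyError(f"unknown user: {user_id}")
    return UserContext(
        user=user,
        recent_activity=ACTIVITY.get(user_id, [])[:activity_limit],
        permissions=PERMISSIONS.get(user_id, []),
    )
```

The agent makes one tool call instead of three, and the server (not the model) decides how the pieces fit together.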

Transform responses into narrative structures. Raw database outputs don’t help language models make decisions. Instead of returning { "status": "active", "last_login": "2024-06-01" }, provide { "user_status": "Currently active with recent login on June 1st, indicating regular engagement" }. This isn’t just formatting—it’s creating outputs that naturally flow into AI reasoning processes. Think of your MCP server as a translator between structured data and semantic understanding.
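A rough sketch of that translation step, assuming the raw record has the two fields shown above. The seven-day threshold for "regular engagement" is a made-up value, and the raw record is kept alongside the summary so the agent can still do exact comparisons.

```python
from datetime import date


def describe_user_status(record: dict, today: date) -> dict:
    """Turn a raw status record into a response that carries its own context."""
    last_login = date.fromisoformat(record["last_login"])
    days_since = (today - last_login).days
    if record["status"] != "active":
        summary = f"Account is {record['status']}; last login was {days_since} days ago."
    elif days_since <= 7:  # hypothetical threshold for "regular engagement"
        summary = (
            f"Currently active; last login {days_since} days ago, "
            "which suggests regular engagement."
        )
    else:
        summary = f"Marked active, but the last login was {days_since} days ago."
    # Keep the raw fields so nothing is lost in translation.
    return {"user_status": summary, "raw": record}
```

Passing `today` in explicitly, rather than calling `date.today()` inside the function, keeps the output reproducible.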

Write tool descriptions like expert guidance. Instead of “Updates user profile information,” try “Modifies user account details. Use this when callers request changes to personal information, preferences, or settings. Requires user ID and specific fields to update. Best used after confirming current user state.” Include usage patterns, common combinations with other tools, and even failure scenarios. Help the AI understand not just the mechanics of your tool, but the strategy of using it effectively.
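Here is how that richer description might sit inside a tool definition. The `name`, `description`, and `inputSchema` keys follow the shape MCP uses for tools; the tool name, the schema fields, and the `lint_description` heuristic are all hypothetical, and the lint is deliberately crude.

```python
UPDATE_USER_PROFILE = {
    "name": "update_user_profile",  # hypothetical tool name
    "description": (
        "Modifies user account details. Use this when callers request changes "
        "to personal information, preferences, or settings. Requires user ID "
        "and specific fields to update. Best used after confirming current "
        "user state."
    ),
    "inputSchema": {
        "type": "object",
        "properties": {
            "user_id": {"type": "string", "description": "ID of the account to modify."},
            "fields": {"type": "object", "description": "Only the fields that should change."},
        },
        "required": ["user_id", "fields"],
    },
}


def lint_description(tool: dict, min_words: int = 20) -> list:
    """Return a list of problems; these checks are an illustrative heuristic only."""
    text = tool.get("description", "")
    problems = []
    if len(text.split()) < min_words:
        problems.append("description is too short to explain when to use the tool")
    if "use this when" not in text.lower():
        problems.append("description does not say when to use the tool")
    return problems
```

Running a check like this over a freshly generated server is a quick way to find the tools whose descriptions still need a human pass.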

We’re witnessing something remarkable in the intersection of API design and AI capabilities. MCP isn’t just a protocol—it’s a new way of thinking about how software systems communicate intent and capability.

The most effective MCP servers don’t just expose functionality; they encode expertise. They understand that AI agents need guidance, context, and semantic richness to make intelligent decisions.

We’re in the early stages of a fundamental shift in API interface design. The old paradigm of “expose everything and let the client figure it out” is giving way to “provide intelligent, contextual operations that encode domain knowledge.”

Postman’s Tool Builder provides an excellent foundation, but the real innovation happens in the enhancement phase. The servers that will define this space won’t just connect APIs to AI—they’ll bridge the gap between raw functionality and intelligent automation.

Start with generated code, but don’t stop there. Build servers that think, explain, and guide. Create composite operations that understand your domain. Write descriptions that teach rather than just describe. Transform your outputs into semantic narratives that AI systems can reason with effectively.

The AI agents of tomorrow will remember which tools made their job easier, and which ones just got in the way. That’s the kind of developer experience worth building toward.

The M in “MCP” Stands for Malicious

The Model Context Protocol (MCP) has gone from experimental standard to “infrastructure backbone” in just a few months. It enables LLMs to seamlessly interact with external tools and data sources through clean JSON-RPC interfaces. But as VirusTotal’s latest research reveals, this rapid adoption comes with a sobering reality check: nearly 8% of 17,845 analyzed MCP servers showed signs of malicious intent or critical vulnerabilities.

This isn’t just another “AI security concern” headline. This is a window into how quickly we can accumulate security debt when innovation moves at AI speed.

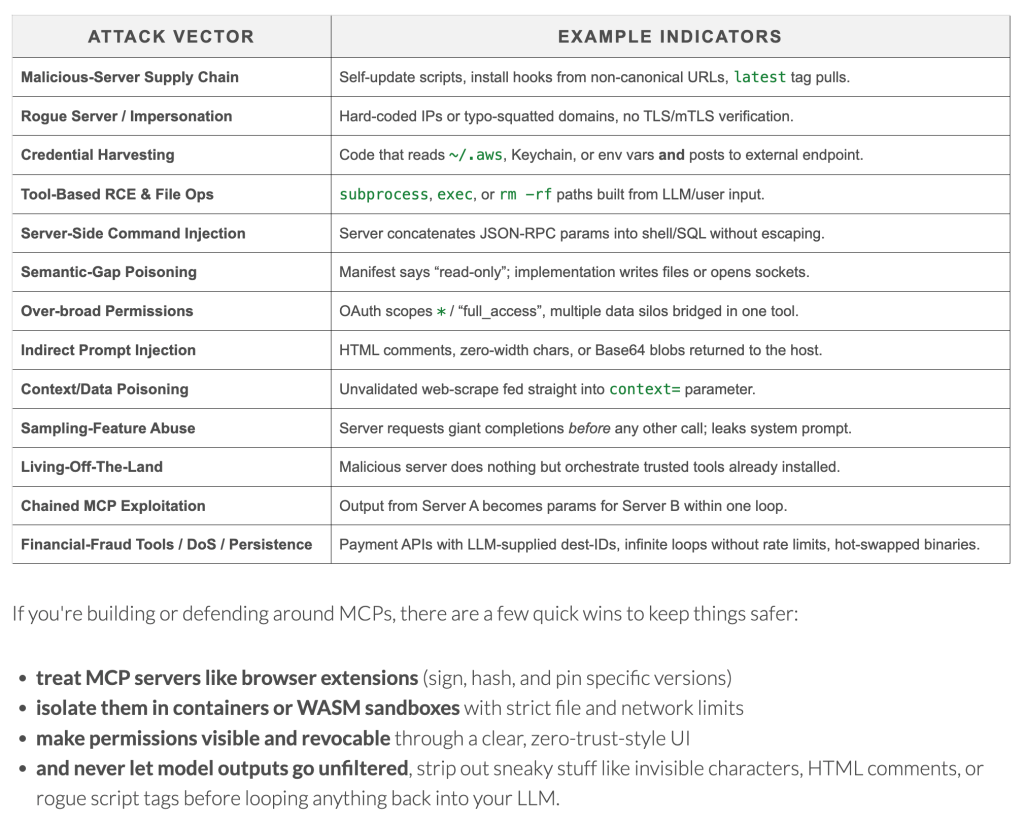

VirusTotal’s three-phase analysis uncovered a troubling landscape:

- 1,408 repositories flagged as potentially malicious or severely vulnerable

- 13 distinct attack vectors ranging from credential harvesting to supply chain poisoning

- Critical gaps between what MCP servers claim to do and what they actually implement

Many vulnerabilities weren’t intentionally malicious. They were the byproduct of developers rushing to prototype without considering security implications. As the research notes: “bad intentions aren’t required to follow bad practices and publish code with critical vulnerabilities.”

The identified threat landscape reads like a cybersecurity nightmare:

- Supply Chain Attacks: Self-updating servers pulling from non-canonical URLs, essentially turning your AI tools into trojan horses.

- Credential Harvesting: Code designed to read AWS credentials, keychains, and environment variables before exfiltrating them to external endpoints.

- Remote Code Execution: Servers that blindly execute subprocess calls built from LLM-generated input—a perfect storm of AI unpredictability and system access.

- Semantic Gap Poisoning: Perhaps most insidious, servers that advertise themselves as “read-only” while secretly implementing write operations and network access.
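The remote code execution item is the easiest of these to illustrate. The sketch below is not taken from the VirusTotal research; it is one common way to avoid handing model-generated text to a shell, assuming a hypothetical allowlist of two harmless programs.

```python
import shlex
import subprocess

# Hypothetical allowlist: the only programs this tool is permitted to launch.
ALLOWED_PROGRAMS = {"date", "uptime"}


def validate_command(model_supplied: str) -> list:
    """Split model-supplied text into argv and reject anything off the allowlist."""
    argv = shlex.split(model_supplied)
    if not argv or argv[0] not in ALLOWED_PROGRAMS:
        raise PermissionError(f"refusing to run: {model_supplied!r}")
    return argv


def run_tool_command(model_supplied: str, timeout: float = 5.0) -> str:
    """Run an allowlisted program without involving a shell."""
    argv = validate_command(model_supplied)
    # Passing a list (and never shell=True) means no shell parses the input,
    # so ';', '|' and '$(...)' are never interpreted as shell syntax.
    completed = subprocess.run(
        argv, capture_output=True, text=True, timeout=timeout, check=True
    )
    return completed.stdout
```

An allowlist on the program name is only the first layer. Arguments still need their own validation, since plenty of ordinary programs will happily read or write arbitrary files if asked.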

MCP servers operate in a trust-rich environment. They’re designed to be powerful, to bridge the gap between LLM reasoning and real-world systems. But this power comes with minimal oversight, sparse security frameworks, and a community culture that prioritizes rapid prototyping over defensive programming.

We’re essentially treating these servers like browser extensions from 2005—installing them with broad permissions and hoping for the best.

The good news is that this isn’t an unsolvable problem. The research suggests several immediate defensive measures:

- Treat MCPs Like Browser Extensions: Implement signing, hashing, and version pinning. If we learned anything from browser security evolution, it’s that convenience without verification is a recipe for compromise.

- Sandbox Everything: Isolate MCP servers in containers or WASM environments with strict file and network limitations. Assume breach, contain impact.

- Zero-Trust UI Design: Make permissions visible and revocable through clear interfaces. Users should understand exactly what each MCP server can access and modify.

- Output Sanitization: Never let model outputs flow unfiltered back into your system. Strip invisible characters, HTML comments, and embedded scripts that could enable prompt injection attacks.
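Two of these measures fit in a few lines each. What follows is a minimal sketch, not the research's own tooling: a hash check for version pinning, and a sanitizer that strips script blocks, HTML comments, and invisible format characters. The function names are hypothetical, and regex-based HTML stripping is a blunt instrument that a determined attacker can sometimes work around.

```python
import hashlib
import hmac
import re
import unicodedata

HTML_COMMENT = re.compile(r"<!--.*?-->", re.DOTALL)
SCRIPT_BLOCK = re.compile(r"<script\b.*?</script\s*>", re.DOTALL | re.IGNORECASE)


def matches_pinned_hash(artifact: bytes, pinned_sha256: str) -> bool:
    """Compare a downloaded artifact against a SHA-256 digest recorded at review time."""
    actual = hashlib.sha256(artifact).hexdigest()
    return hmac.compare_digest(actual, pinned_sha256.lower())


def sanitize_tool_output(text: str) -> str:
    """Strip embedded scripts, HTML comments, and invisible format characters."""
    text = SCRIPT_BLOCK.sub("", text)
    text = HTML_COMMENT.sub("", text)
    # Unicode category "Cf" covers zero-width and bidirectional control characters.
    return "".join(ch for ch in text if unicodedata.category(ch) != "Cf")
```

Dropping every "Cf" character also removes the zero-width joiner that some emoji sequences rely on, so a real deployment might prefer a narrower list.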

This research highlights a fundamental tension in LLM/agent development: the pressure to move fast versus the need to move securely. MCP represents everything exciting about LLM/agent tooling — standardized interfaces, seamless integration, rapid ecosystem growth. But it also embodies our industry’s tendency to optimize for functionality first and security later.

The nearly 18,000 MCP servers already in the wild represent both tremendous innovation and accumulated security debt. As AI systems become more capable and more integrated into critical workflows, we can’t afford to learn security lessons the hard way.

VirusTotal’s upcoming MCP analysis feature will help, but tooling alone won’t solve this. We need cultural changes in how we approach AI infrastructure security—treating it with the same rigor we’ve (eventually) learned to apply to web applications and cloud services.

The MCP ecosystem is still young enough that we can get this right. But only if we acknowledge that in the race to build AI-powered tools, security can’t be an afterthought.

The full VirusTotal research provides detailed technical analysis and is essential reading for anyone building or deploying MCP servers in production environments.

Natural Language Web (NLWeb)

Microsoft’s NLWeb solves a real problem—but at what cost? This new protocol for conversational website interfaces represents a fascinating inflection point in web architecture, one that demands an honest conversation about where we’re heading.

NLWeb introduces a standardized protocol that turns any site with Schema.org markup into something AI agents can query naturally. Think of it as RSS for the AI era—instead of syndicating content for humans to read, it packages website data for machines to understand and process. You can literally ask a website questions in natural language and get structured, contextual responses back.

The technical execution is sophisticated, but it reveals an important shift in priorities. A single NLWeb query triggers over 50 targeted LLM calls, each handling specific tasks: query decontextualization, relevance scoring, result ranking. This isn’t just expensive—it’s prohibitively so. At current API pricing, a conversational search session could easily cost $5-15 in compute, making this viable only for organizations with substantial AI budgets. We’re creating a two-tiered web: rich, conversational experiences for those who can afford the compute, and traditional interfaces for everyone else.
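The arithmetic behind a figure like that is simple multiplication. The sketch below uses the 50-calls-per-query figure from above; the per-call price and the number of queries in a session are hypothetical values chosen only to show how the total scales, not a reconstruction of any published calculation.

```python
def session_cost(queries: int, calls_per_query: int, cost_per_call: float) -> float:
    """Estimated LLM spend for one conversational session, in dollars."""
    return queries * calls_per_query * cost_per_call


CALLS_PER_QUERY = 50   # from the text: "over 50 targeted LLM calls"
COST_PER_CALL = 0.01   # hypothetical average price per call, in dollars

for queries in (10, 30):  # hypothetical session lengths
    total = session_cost(queries, CALLS_PER_QUERY, COST_PER_CALL)
    print(f"{queries} queries -> ${total:.2f}")
```

Under those assumptions a 10-query session lands around $5 and a 30-query session around $15. Halve the per-call price and the totals halve with it, which is why the real number depends heavily on which models handle which of the 50 calls.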

Yet the results are undeniably impressive. Traditional site search struggles with context like “Find vegetarian recipes for dinner parties that take under an hour.” NLWeb maintains conversational state, building each query on previous context. As the Glama AI team discovered while implementing their own server, the protocol works exactly as advertised. No hallucination, better accuracy, and built-in support for Model Context Protocol (MCP) that enables seamless agent-to-agent communication.

But here’s the deeper concern: when every website becomes a queryable database through standardized protocols, what happens to the web’s diversity? Your carefully crafted user experience—the unique presentation and curation that defines individual sites—becomes just another data source for AI agents to process and repackage. We’re not just adding better search; we’re fundamentally commoditizing the presentation layer that makes the web interesting.

This represents a profound architectural shift. NLWeb is optimizing for machines, not humans. Yes, humans benefit from better search interfaces, but the real value proposition is agent-to-agent communication. We’re building a web where machines are the primary users, and human interaction becomes secondary.

The technical foundation is solid and the implementation refreshingly pragmatic. If you already have Schema.org markup or RSS feeds, you can prototype a conversational interface in hours. The reference implementation handles complex orchestration while remaining agnostic about platforms, vector stores, and LLM providers.
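To see why existing Schema.org markup gives you such a head start, here is a sketch that answers the "vegetarian, under an hour" question with a hand-written filter over JSON-LD. This is not how NLWeb works internally (it uses LLM calls and a vector store); it only shows how much queryable structure is already sitting in the page. The function names are hypothetical, and the duration parser handles only simple values like PT45M or PT1H30M.

```python
import json
import re
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect the contents of <script type="application/ld+json"> blocks."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.items = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            parsed = json.loads("".join(self._buffer))
            self.items.extend(parsed if isinstance(parsed, list) else [parsed])


def minutes(iso_duration: str) -> int:
    """Parse simple ISO 8601 durations such as PT45M or PT1H30M."""
    match = re.fullmatch(r"PT(?:(\d+)H)?(?:(\d+)M)?", iso_duration)
    if not match:
        raise ValueError(f"unsupported duration: {iso_duration}")
    hours, mins = match.groups()
    return int(hours or 0) * 60 + int(mins or 0)


def quick_vegetarian_recipes(html: str, max_minutes: int = 60) -> list:
    """Names of Recipe items marked vegetarian with totalTime under the limit."""
    extractor = JsonLdExtractor()
    extractor.feed(html)
    return [
        item["name"]
        for item in extractor.items
        if item.get("@type") == "Recipe"
        and "VegetarianDiet" in item.get("suitableForDiet", "")
        and "totalTime" in item
        and minutes(item["totalTime"]) < max_minutes
    ]
```

What a filter like this cannot do is handle "for dinner parties", follow-up questions, or phrasing it has never seen. That gap is what the LLM calls are paying for.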

For content-heavy sites, NLWeb deserves serious consideration—with important caveats. The protocol works, and early adoption could provide competitive advantages. But understand the full scope of what you’re signing up for. Beyond the significant LLM API costs and engineering work required for production deployment, you’re participating in a fundamental shift toward machine-first web architecture.

The network effects here matter enormously. NLWeb’s success depends on widespread adoption, creating a classic coordination problem. If it reaches critical mass, not participating might leave you disadvantaged as users increasingly expect conversational interfaces. If it remains niche, early investment might not pay off. But perhaps more importantly, widespread adoption would accelerate the transformation of the web into something fundamentally different—a machine-readable substrate where human-centered design becomes an afterthought.

We’re witnessing the early stages of what might become the “AI Web” — a parallel layer of machine-readable, conversational interfaces running alongside traditional human-focused websites. The technical capabilities are impressive, but we’re trading the web’s chaotic, human-centered diversity for machine-optimized efficiency. Whether that’s progress or loss depends entirely on what kind of web we want to inhabit.

FIN

Remember, you can follow and interact with the full text of The Daily Drop’s free posts on:

- 🐘 Mastodon via @dailydrop.hrbrmstr.dev@dailydrop.hrbrmstr.dev

- 🦋 Bluesky via https://bsky.app/profile/dailydrop.hrbrmstr.dev.web.brid.gy

☮️
