Reader-LM Redux; AI Weirdness; The Many Frustrations Of LLMs; Space-Man

Today, I’m dunking on our AI overlords something fierce, which felt pretty darn good tbh.

TL;DR

(This is an AI-generated summary of today’s Drop using Ollama + llama 3.1b and a custom prompt.)

Both llama 3.1b and Perplexity failed to initially provide links, likely due to me being mean to LLMs in the first three sections. When I switched pplx to Claude+Sonnet, it worked fine.

- Reader-LM, a small language model by Jina AI for HTML-to-Markdown conversion, shows potential but is not yet ready for casual use, underperforming compared to traditional tools in practical tests (https://jina.ai/news/reader-lm-small-language-models-for-cleaning-and-converting-html-to-markdown/?nocache=1)

- Janelle Shane’s “New AI Paint Colors” experiment demonstrates the evolution of AI in generating paint color names, highlighting both improvements and persistent challenges in AI’s creative capabilities (https://www.aiweirdness.com/new-ai-paint-colors/)

- Jon Evans’ article “The Many Frustrations of LLMs” explores challenges when working with large language models, including inconsistency, freakouts, fustian language, and fabrications (https://aiascendant.substack.com/p/the-many-frustrations-of-llms)

- Space-Man, a game and showcase built using OpenAI’s o1-preview and other AI models, demonstrates practical applications of recent AI technologies (https://picatz.github.io/space-man/)

Reader-LM Redux

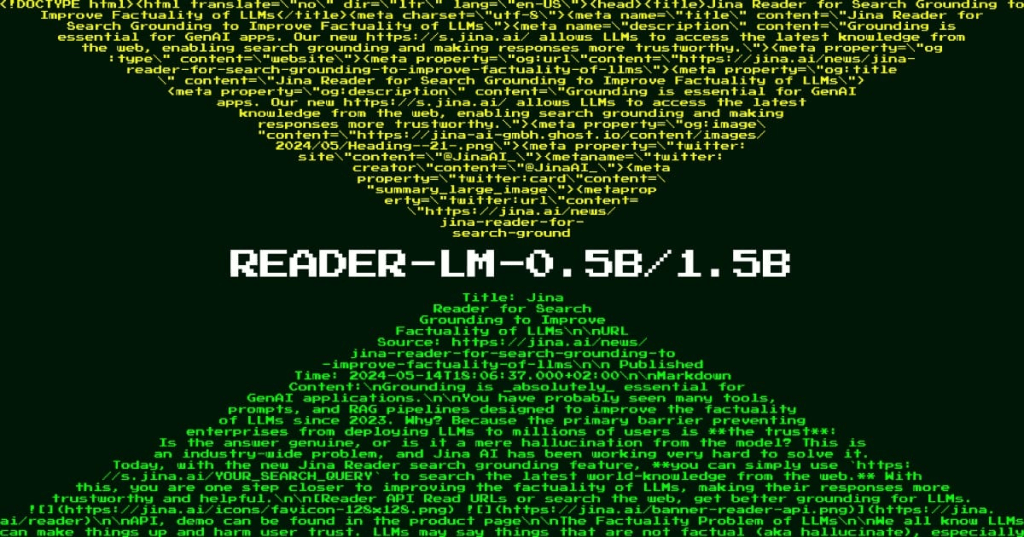

We’ve covered Jina AI-related topics a few times before, and I 💙 the simplicity and elegance of their https://r.jina.ai/ HTML-to-Markdown free API. So, when I heard about Reader-LM, I was pretty excited. I’m a big fan of small language models, and was eager to try out this neat approach to the HTML-to-Markdown problem space.

So as not to bury the lede, the TL;DR is that it’s not ready for casual/general use. Jump to near the end of this section to the “👉” if you just want to find out why. The bits below this paragraph are primarily a summary of “what” and “how” for these new models.

Reader-LM is an small language model — with lots of potential — created by Jina AI, designed specifically for data extraction and cleaning on the open web. Its primary focus is to convert raw, noisy HTML into clean markdown, with an emphasis on cost-efficiency and practical usability.

The task of converting HTML to Markdown is largely a “selective-copy” task. This means that the model needs to extract content while ignoring unnecessary HTML elements like sidebars, headers, and footers. The relative simplicity of this task suggests that a smaller language model may be sufficient, unlike tasks typically handled by larger language models. Additionally, given the size and complexity of modern HTML, which often includes embedded CSS and scripts, Reader-LM supports long context lengths, up to 256K tokens, ensuring practical performance.

Two versions of the model have been developed: reader-lm-0.5b and reader-lm-1.5b. Both are multilingual and have been trained to directly generate clean markdown from noisy HTML. Despite their smaller size, they outperform larger language models while being only a fraction of their size.

Training data for Reader-LM was generated through the Jina Reader API, responsible for converting HTML to markdown. Additional data, including synthetic HTML and markdown pairs generated by GPT-4o, were also included, resulting in a total training set of 2.5 billion tokens. The model was trained in two stages: a short-and-simple HTML phase (32K tokens) and a long-and-hard HTML phase (128K tokens), using a zigzag-ring-attention mechanism for the latter stage.

In quantitative evaluations, Reader-LM outperformed larger models in key metrics such as ROUGE-L, Token Error Rate (the rate at which the generated markdown tokens do not appear in the original HTML content), and Word Error Rate (WER). A qualitative study further showed that Reader-LM-1.5B consistently excelled in tasks related to structure preservation and Markdown syntax usage.

To combat issues like repetition and looping, Reader-LM incorporated contrastive search and a simple repetition stop criterion. Training efficiency was improved through chunk-wise model forwarding and enhanced data packing, which helped address out-of-memory errors and optimize long input processing.

The team also experimented with an encoder-only model, but it encountered practical difficulties such as sparse labels and challenges in encoding Markdown syntax. Due to the complexity of constructing the necessary training data, this approach was ultimately abandoned.

👉 These models were Ollama ready, so I did an ollama pull reader-lm and decided to test it out on what, I thought, would be a slam-dunk (given the model’s write-up).

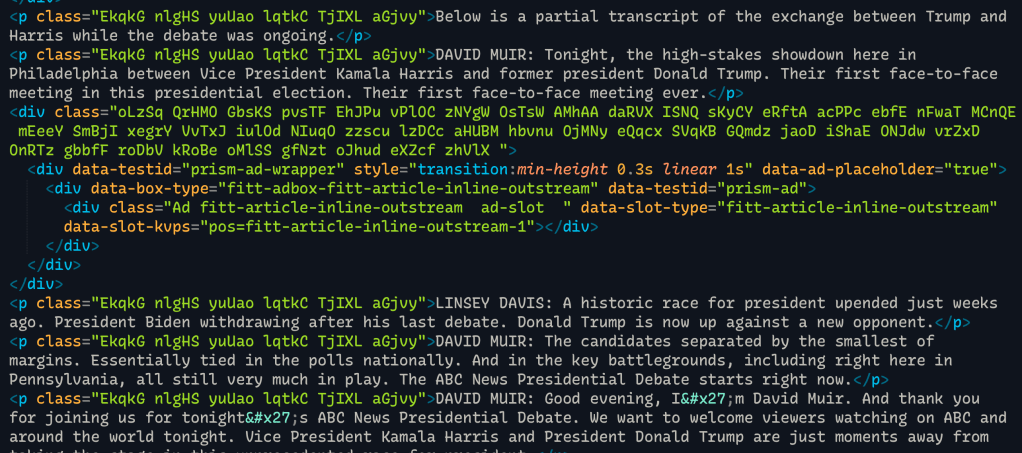

The morning after this week’s POTUS debate, I used html2md to convert ABC’s HTML transcript to markdown. It did a great job, as did the non-AI-powered Jina AI API for the same operation https://r.jina.ai/https://6abc.com/read-harris-trump-presidential-debate-transcript/15289001/?ex_cid=TA_WPVI_TW&taid=66e17135f97ea600019d0d2f.

The HTML is a bit ugly, but the textual content is mostly well-structured:

Given how easy it was for the traditional tools to extract the salient parts (without any tweaking on my part), I figured it’d be a slam dunk for these new models, and was set to just show timing benchmarks.

Sadly, it completely failed to extract anything meaningful at all from the HTML on my local MacBook Pro with just a ollama run reader-lm < /tmp/transcript.html, which used the 1.5B version of the model.

I initially thought that result might be due to quanitzation, so I forked the Google Collab notebook and used their “real” GPUs with Jina’s own Python code. (Fork that and restart the session to try it on your own — it’s on the free tier). I was a bit dismayed that they had code to strip out various HTML bits, but continued on, and — after “grab a beverage” amount of time — it did extract some of the transcript (you need to tweak the output token size to get it all).

Since it did, indeed work, I abandoned the CLI and wrote some R code to clean the input HTML and use my local Ollama API, which lets me change the model parameters to those of the ones in the Collab notebook:

input <- rvest::read_html("/path/to/saved/html/file")

# Why the Jina folks used regular expression search/replace

# to edit HTML I will never know.

rvest::html_nodes(

input,

xpath = "//*[self::script or self::svg or self::style or self::img or self::meta or self::link] | //comment()"

) |>

xml2::xml_remove()

input_trimmed <- as.character(input)

httr::POST(

url = "http://localhost:11434/api/generate",

httr::accept_json(),

httr::content_type_json(),

encode = "json",

body = list(

model = "reader-lm",

prompt = input_trimmed,

stream = FALSE,

keep_alive = "30m",

options = list(

top_k = 1,

temperature = 0,

repeat_penalty = 1.08,

presence_penalty = 0.25,

num_predict = 2048,

num_ctx = 256000

)

)

) -> res

httr::status_code(res)

httr::content(res, as = "text") |>

jsonlite::fromJSON() -> x

It took ~5 minutes and did result in the same (truncated, due to the output token size) output as the Collab notebook. I used hyperfine to run the equivalent html2md command, which resulted in:

| Command | Mean [ms] | Min [ms] | Max [ms] | Relative |

|---|---|---|---|---|

html2md --in /tmp/input | 28.0 ± 0.8 | 26.6 | 30.1 | 1.00 |

The more traditional tool took far less time, consumed far fewer resources, and produced excellent output.

I’m still excited about the potential of small language models in general, but I’ll be reclaiming the storage space taken up by this fairly useless model in short order.

AI Weirdness

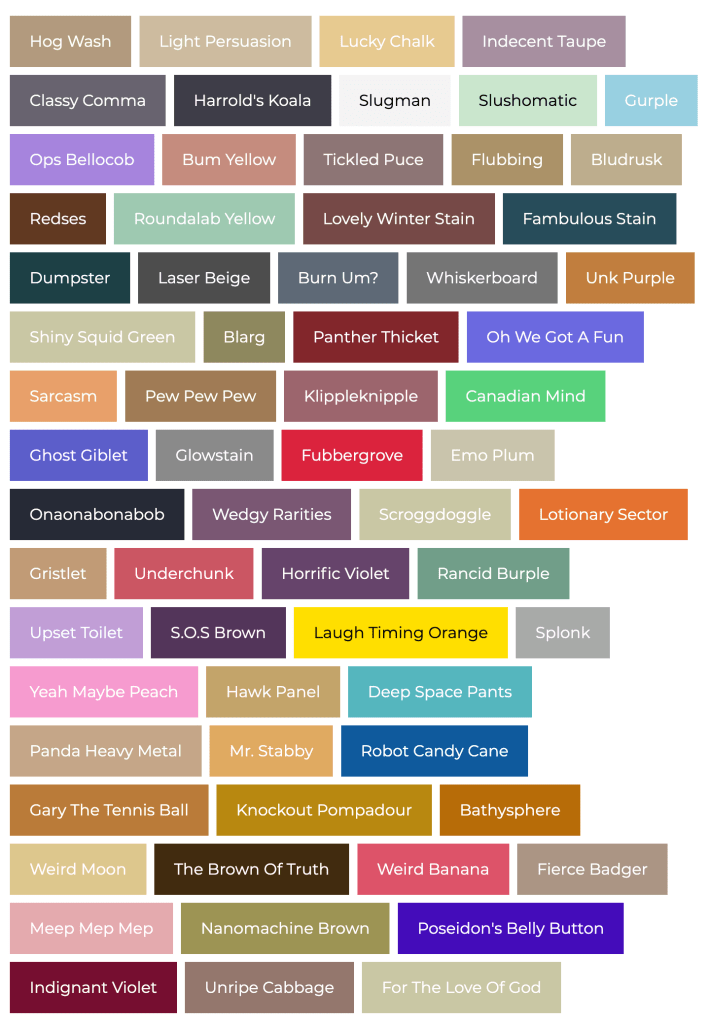

“New AI Paint Colors” is a post by Janelle Shane which explores the use of neural networks to generate and name [paint] colors. Janelle’s goal was to show AI has evolved in this specific creative domain, and draw attention to both its successes and challenges.

Shane began by conducting early experiments, where she trained a neural network from scratch using lists of colors found online. Despite the neural net having no prior training in English or any other language, it managed to reproduce some letter combinations from the original data. However, it lacked context, resulting in nonsensical and impractical color names.

When more advanced models like GPT-3 (ah, the good old days?) were introduced, they significantly improved the ability to produce more coherent and contextually appropriate color names and RGB values. In those experiments, both DaVinci and Ada versions of the model were used. DaVinci generally adhered to the RGB color format, but Ada sometimes generated values that fell outside the standard 0-255 range for RGB coordinates (rude!).

Some of the examples in the post are pretty amusing (in a bad way). One such color, “You Must Be Above This,” included an invalid green coordinate of 311, while “Blobsday” had an excessive red value. “Painted Batman” was described as 8.5 times redder than a computer can display, and “Starbat” had four color coordinates instead of the usual three.

While these models do clearly demonstrate impressive pattern recognition and “creativity” (I dislike using words like that, but Janelle uses them, so 🤷🏼), it can still make mistakes, such as producing the aforementioned impossible RGB values. Fine tuning with more focused training data will most certainly improve the results. But, there was enough color theory training data in the core models to make the token predictions align with rudimentary color theory principles (I can only imagine the volume of hex strings from codebases and design templates that made their way into the corpus).

Shane emphasizes that AI’s ability to generate paint colors and contribute to artistic creations offers exciting possibilities for innovation. At the same time, it raises questions about the role of AI in creative processes and the potential for AI to assist or even replace human artists in certain tasks.

Even if you’re not interested in generating palettes + names yourself, it’s worth a look just to see how the experiments turned out.

The Many Frustrations Of LLMs

Jon Evans wrote a (at least for me) very cathartic piece on “The Many Frustrations Of LLMs” in a recent edition of Gradient Ascendant. His goal was to explore the challenges and inconsistencies that arise when working with large language models, and he highlights several key frustrations that one can encounter and discusses strategies for managing these issues in $WORK settings.

One of the core problems with LLMs is consistency. Unlike traditional software, which is designed to be predictable and reliable, LLMs often behave unpredictably and are highly sensitive to slight variations in input. This lack of predictability leads to phenomena like Heisenbugs (such a great term!), where outputs appear randomly inconsistent, making it difficult to rely on LLMs for repeatable results.

Among the specific frustrations, “freakouts” occur when LLMs fail at a structural level. This can be something as fundamental as missing newlines, broken formatting, or syntax issues. Additionally, each new version of a model can introduce new problems with fidelity, requiring prompts to be adjusted or rewritten. Another common issue is fustianism (if that URL link expires paste ‘fustian’ into https://www.wordsapi.com/), where LLMs tend to produce verbose or pretentious language, though this can sometimes be mitigated with precise prompting.

LLMs may also refuse to engage with culturally sensitive topics, a result of refutations caused by alignment techniques like Reinforcement Learning from Human Feedback (RLHF). They also struggle with following negative instructions, often requiring multiple iterations to achieve the desired outcome. Their performance can degrade during context switching, as they have limited “cognitive bandwidth,” a problem the article refers to as “figuring”. General purpose LLMs are notoriously weak at mathematics and often require external tools to handle calculations, summarized under the frustration of “figures”.

Another point of concern noted by Jon is “fixations”, where LLMs develop biases, such as an overemphasis on error handling in code analysis. LLMs are also, generally, poor at processing time and dates correctly, often needing clear formatting to avoid misunderstandings. Perhaps most frustrating of all is their tendency toward outright fabrications, which can arise from low-quality data or improper use of Retrieval-Augmented Generation (RAG) systems.

It’s a great read, and something to keep in your bookmarks the next time some “super smart exec” tells you to “AI ALL THE THINGS!”.

Space-Man

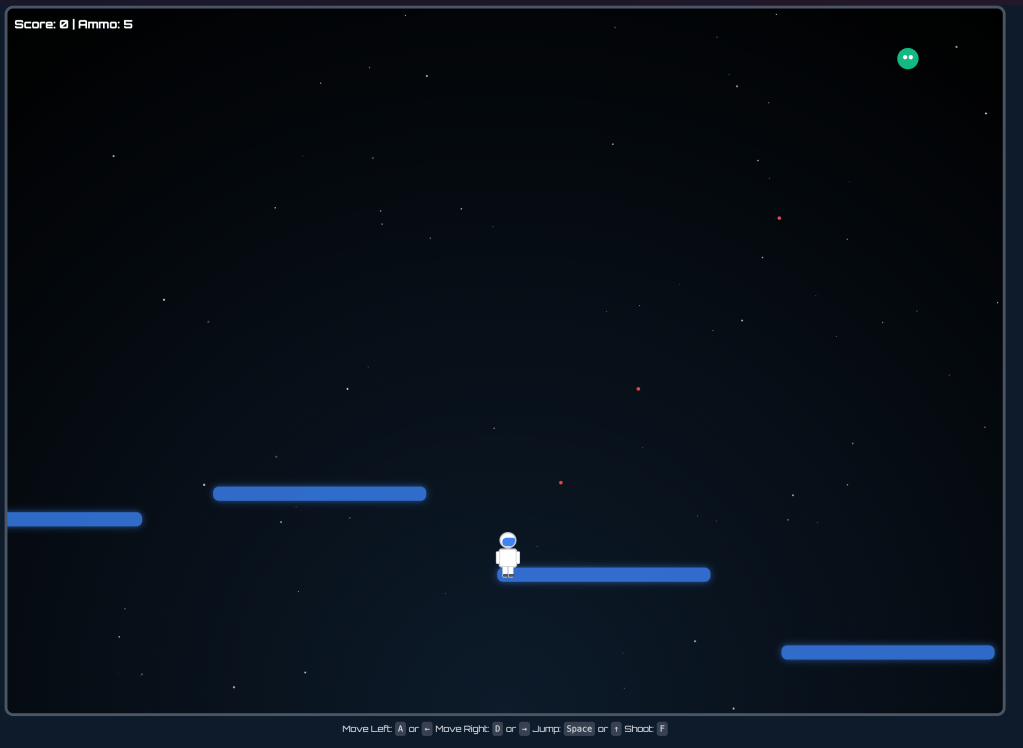

As promised, there’s a fourth section, today, and it’s both a game and a showcase of something that was built with OpenAI’s brand new o1-preview (plus some help from gpt-4o, o1-mini and Claude 3.5 Sonnet).

FIN

Remember, you can follow and interact with the full text of The Daily Drop’s free posts on Mastodon via @dailydrop.hrbrmstr.dev@dailydrop.hrbrmstr.dev ☮️

Leave a comment