Intel One Mono; Gosh I Gotta Work On That Acronym; Chomsky’s Downfall

Happy Mater’s Day to all who celebrate!

Today, we let you put your newfound design skills to work, introduce you to some projects created by a very clever human, and leave you with a thought-provoking defense of LLMs that may have caused even me to lighten up a bit.

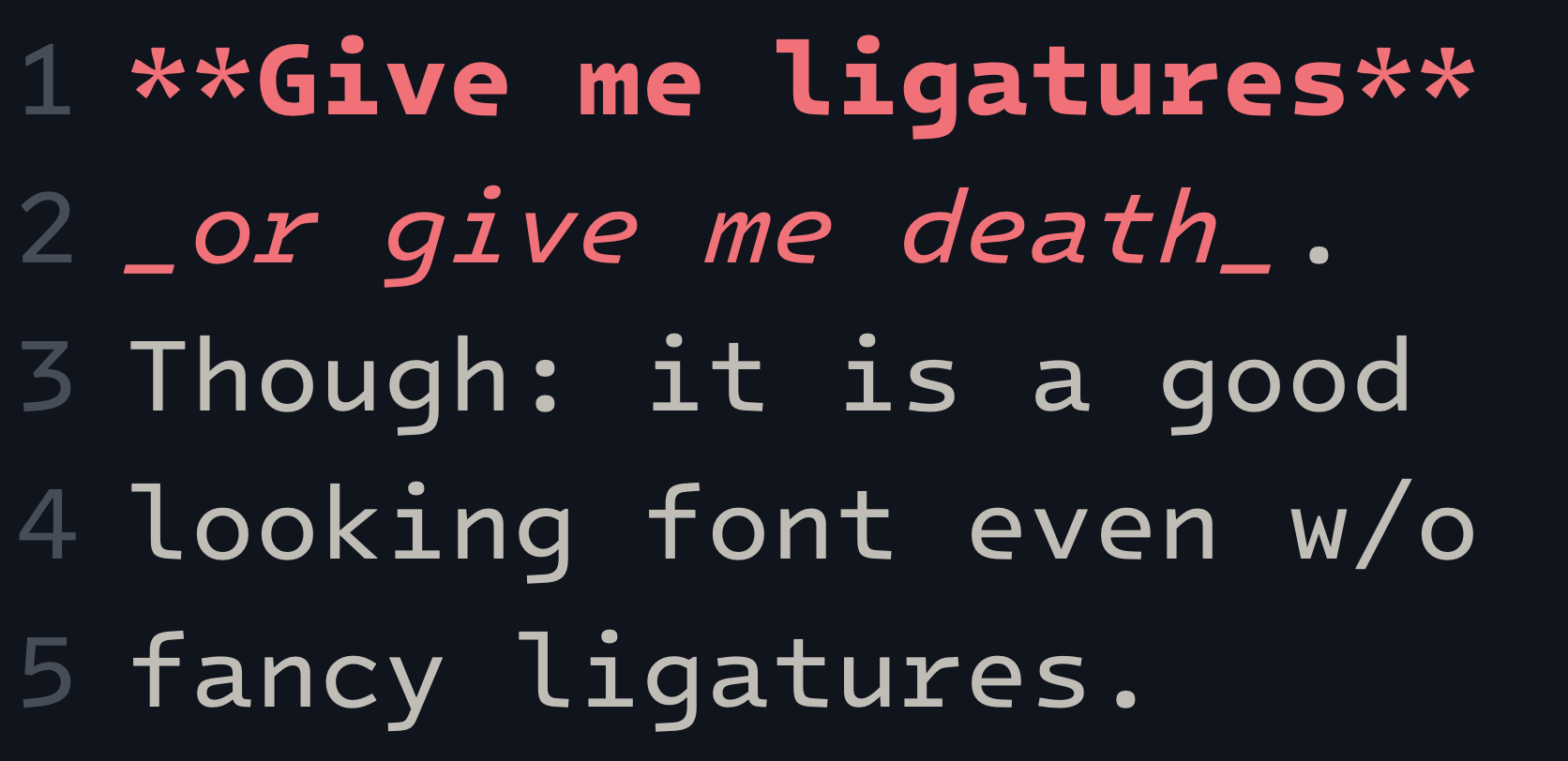

Intel One Mono

Friday’s WPE was a tad font-heavy, and I hope you did practice a bit, since you’re going to have a chance to play the role of a bona fide typographer in this first section.

Intel One Mono is a new monospaced font designed by Intel’s brand team to improve readability for developers. The font aims to reduce eyestrain and coding errors by being more legible. Its glyphs cover over 200 languages, and it comes in Light, Regular, Medium, and Bold weights with matching italics. The font files can be used in both desktop and mobile apps. The OpenType features include a raised colon option, language support features, and superscript/subscript numerals. Editable UFO font sources are provided for those who want to customize the font.

Let’s look at the features in a bit more detail.

Outside the default characters, there are a few extra features that are accessible in some applications, as well as via CSS:

raised colon: there is an option for a raised colon, either applied contextually between numbers or activated generally. The contextual option is available via ss11 (Stylistic Set #11); use ss12 (Stylistic Set #12) or salt (Stylistic Alternates) for the global switch.

language support: ccmp, mark, and locl features ensure correct display across a wide range of languages. These are usually activated by default. We recommend setting the language tag/setting in your software to the desired language for best results.

superior/superscript and inferior/subscript figures are included via their Unicode codepoints, or you can produce them from the default figures via the sups (Superscript), subs (Subscript), and sinf (Scientific Inferior) features.

fraction numerals are similarly available via the numr (Numerator) and dnom (Denominator) features. A set of premade fractions is also available in the fonts.
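Since these features can be switched on via CSS, here is a minimal sketch of how one might generate the relevant rule. It relies only on the standard CSS `font-feature-settings` property and the feature tags listed above. The helper names, the `code.clock` selector, and the "Intel One Mono" family string are assumptions for illustration, so check them against how the font is actually installed or served on your system.

```python
def font_feature_settings(*tags: str) -> str:
    """Build a CSS font-feature-settings declaration that enables each tag."""
    if not tags:
        raise ValueError("at least one feature tag is required")
    for tag in tags:
        # OpenType feature tags are always four characters long.
        if len(tag) != 4:
            raise ValueError(f"not a four-character OpenType tag: {tag!r}")
    enabled = ", ".join(f'"{tag}" 1' for tag in tags)
    return f"font-feature-settings: {enabled};"


def css_rule(selector: str, *tags: str) -> str:
    """Wrap the declaration in a rule that also selects the font family."""
    return (
        f"{selector} {{\n"
        # Assumed family name; adjust to match your installed/served font.
        '  font-family: "Intel One Mono", monospace;\n'
        f"  {font_feature_settings(*tags)}\n"
        "}"
    )


if __name__ == "__main__":
    # Contextual raised colon (ss11) plus numerator figures (numr).
    print(css_rule("code.clock", "ss11", "numr"))
```

Swapping `ss11` for `ss12` or `salt` would request the global raised colon instead of the contextual one.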

As you peruse the repo, your eyes do not deceive you: you are really seeing UFOs. 🛸

No, not little green 👽, but Unified Font Objects (GH). This fancy-sounding name is just a tag for a directory structure containing a certain set of files.

*.ufo
├── metainfo.plist
├── fontinfo.plist
├── groups.plist
├── kerning.plist
├── features.fea
├── lib.plist
├── layercontents.plist
├── glyphs
│   ├── contents.plist
│   ├── layerinfo.plist
│   └── *.glif
├── images
│   └── *.png
└── data

Each of the files and directories has a unique meaning, purpose, and data structure:

metainfo.plist: Format version, creator, etc.

fontinfo.plist: Various font info data.

groups.plist: Glyph group definitions.

kerning.plist: Kerning data.

features.fea: OpenType feature definitions.

lib.plist: Arbitrary custom data.

layercontents.plist: Glyphs directory name to layer name mapping.

glyphs*: A directory containing a glyph set representing a layer.

glyphs*/contents.plist: File name to glyph name mapping.

glyphs*/layerinfo.plist: Information about the layer.

glyphs*/*.glif: A glyph definition.

images: A directory containing images referenced by glyphs.

data: Arbitrary custom data in a quantity or structure that can’t be stored in lib.plist.
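Because a UFO is just a directory of property lists and glyph files, you can poke at one with nothing but the Python standard library. The sketch below is a rough illustration, not a substitute for a real UFO library: `summarize_ufo` and `read_plist` are made-up helper names, and the code assumes the `formatVersion` and `creator` keys in metainfo.plist, a layercontents.plist holding `[layer name, directory name]` pairs, and a contents.plist keyed by glyph name. It also assumes an older UFO without layercontents.plist keeps everything in a single `glyphs` directory.

```python
import plistlib
from pathlib import Path


def read_plist(path: Path):
    """Parse one property-list file from the UFO directory."""
    with path.open("rb") as handle:
        return plistlib.load(handle)


def summarize_ufo(ufo_dir: str) -> dict:
    """Return the format version, creator, and glyph names per layer."""
    root = Path(ufo_dir)
    meta = read_plist(root / "metainfo.plist")

    # Assumption: layercontents.plist is a list of [layer name, directory name]
    # pairs; if it is absent, fall back to the single "glyphs" directory.
    layer_file = root / "layercontents.plist"
    if layer_file.exists():
        layers = read_plist(layer_file)
    else:
        layers = [["public.default", "glyphs"]]

    glyphs = {}
    for layer_name, dir_name in layers:
        # Assumption: contents.plist is a dict of glyph name -> .glif file name.
        contents = read_plist(root / dir_name / "contents.plist")
        glyphs[layer_name] = sorted(contents)

    return {
        "format_version": meta.get("formatVersion"),
        "creator": meta.get("creator"),
        "glyphs": glyphs,
    }
```

Calling `summarize_ufo("path/to/SomeFont.ufo")` (a placeholder path) gives a quick inventory of which glyphs each layer contains before you open the sources in a proper font editor.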

What does this have to do with “design” practice in Friday’s WPE?

Well, there is a plethora of resources you can use to customize this 🛸 font, which means you have a chance to apply what you’ve learned and see if there might be a hidden glyph-master in you.

As noted in the section header, the font is spiffy in current form, but if you just happen to ligature-ify a version of it, a certain friendly neighborhood hrbrmstr wouldn’t say “no” to a link.

Gosh I Gotta Work On That Acronym

I’ve been put into a bit of a “GraphQL hades” at work. I don’t think GraphQL solves…well…any real problem. All it does is toss yet another file format into the universe and add needless complexity.

In my search for some GraphQL assistance, I came across a repo whose name is highly coincidental (in relation to the Drops from this week). It sports a small and unobtrusive framework for building JSON-based web APIs on REST or GraphQL-based architectures. It is designed to be easy and quick to get started with, while also being flexible enough to allow for more complex use cases. Some of the features of BARF (“basically a remarkable framework”, which smells like another backronym) include custom configuration, request logging, and panic recovery.

While there are scads of web server frameworks available, what makes BARF different is its focus on simplicity and ease of use. It is not a full-fledged web application framework like Django or Express, but rather “just” a lightweight solution for creating REST/GraphQL-based APIs.

To get a better understanding of how BARF compares to other web server frameworks, you can refer to the following resources:

While you’re there, please take a moment to poke around the other repos created/maintained by opensaucerer. They are a very clever and talented human who you’ll want to keep an eye on.

Chomsky’s Downfall

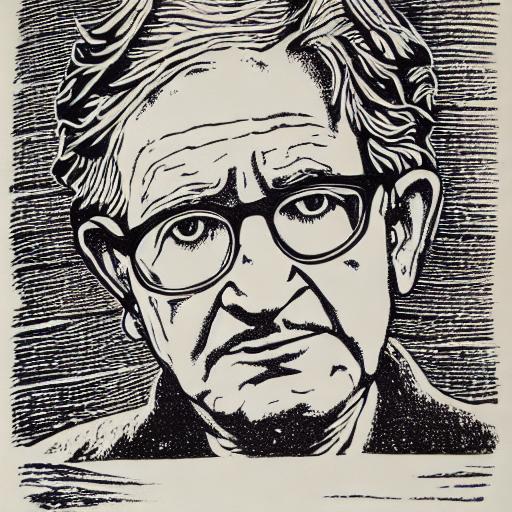

Steven Piantadosi is a professor at UC Berkeley in psychology and neuroscience, where he heads the computation and language lab.

Back in March, Steven dropped a pretty serious paper, titled: Modern language models refute Chomsky’s approach to language.

Here’s the abstract:

The rise and success of large language models undermines virtually every strong claim for the innateness of language that has been proposed by generative linguistics. Modern machine learning has subverted and bypassed the entire theoretical framework of Chomsky’s approach, including its core claims to particular insights, principles, structures, and processes. I describe the sense in which modern language models implement genuine theories of language, including representations of syntactic and semantic structure. I highlight the relationship between contemporary models and prior approaches in linguistics, namely those based on gradient computations and memorized constructions. I also respond to several critiques of large language models, including claims that they can’t answer “why” questions, and skepticism that they are informative about real life acquisition. Most notably, large language models have attained remarkable success at discovering grammar without using any of the methods that some in linguistics insisted were necessary for a science of language to progress.

It’s hard to argue that LLMs have not achieved “remarkable” success in generating grammatical text, stories, and explanations. They are trained simply on predicting the next word in a text, yet can produce novel and coherent outputs.

These models undermine Chomsky’s generative linguistics approach in several ways:

they succeed without using any of the methods that Chomsky claimed were necessary for a science of language, such as innate principles and structures.

the models implement their own theories of language through the parameters they learn from data. Their representations capture aspects of syntax and semantics.

the models integrate various computational approaches to language, like constructions and constraints, in a way that was not envisioned by generative linguistics.

the models show that it is possible to discover hierarchy, syntax, and semantics simply from text prediction, contradicting Chomsky’s claims.

while the models are imperfect, they represent a significant scientific advance by demonstrating that text prediction can provide enough of a learning signal to acquire many aspects of grammar and language use.
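To make “text prediction” a bit more concrete, here is a deliberately tiny sketch of the idea: count which word follows which in some text, then guess the most common follower. This is a toy bigram counter, not how an LLM works internally and not anything taken from the paper; the function names and the sample sentence are made up for illustration. Real models replace the counting with learned parameters and look at far more than one preceding word.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict:
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(model: dict, word: str):
    """Return the most frequently seen follower of `word`, or None if unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


if __name__ == "__main__":
    corpus = "the cat sat on the mat and the cat slept"
    model = train_bigrams(corpus)
    print(predict_next(model, "the"))
```

Even this crude version picks up a sliver of structure from raw text alone, which is the learning signal the list above is talking about, just at a vastly smaller scale.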

I’ll leave the rest of the analysis to you. But — fair warning — I went into the paper with an open mind, and said mind has been altered (for the better) a bit, in light of Steven’s defenses of many of his posits.

FIN

Sending out good thoughts to all those missing a “mom” today and all “moms” who have endured the loss, in any capacity, of a treasured loved one in their care. 💙 💛 ☮
